AI Ethics Unveiled: Navigating the Future of Responsible Technology

Artificial intelligence (AI) is taking center stage in development discussions in almost every industry. In the corporate world, generative AI tools such as ChatGPT and AI-powered human resources (HR) and interview processes are both growing in popularity.

With any new technology, especially in AI development, you have to weigh the moral principles involved and the broader ethical implications of how these tools operate. In this post, we’ll dive into the ethical concerns associated with AI and how you can best address them in your organization.

What is AI ethics?

AI ethics refers to the framework companies and individuals use to responsibly develop, deploy, and use AI. It helps ensure AI systems align with core business values, human rights, and societal well-being.

The goal is to maximize the benefits of this evolving technology while limiting the risks and adverse outcomes. For example, AI ethics can protect qualified job candidates by preventing bias in AI recruitment tools that would otherwise unfairly reject them.

Because modern AI tools are so recent, and AI research continues to evolve at a staggering pace, the ethics, applications, and regulations surrounding the technology are still taking shape.

The EU Artificial Intelligence Act is one of the first official AI regulations describing what precautions organizations should take when using AI. The EU AI Act outlines key pillars, including autonomy and oversight, technical robustness, privacy, and fairness.

AI governance is still under development in most countries and regions, which means the private sector often sets its own expectations for responsible AI use. To avoid creating or exacerbating problems, it falls on businesses to handle AI responsibly until a more solid framework is in place.

Why is ethical AI important?

The ethics of AI matter because businesses are rapidly integrating the technology into core systems and processes, so its effects reach nearly every major decision you make. As the technology's role in modern life expands, organizations and individuals have an obligation to consider the potential impacts of its use.

Maintaining a positive public perception

AI can make business operations more efficient and enable innovation, but it can also create problems. As Chapman University explains in ‘Bias in AI,’ training AI with flawed datasets and using it without proper oversight can have long-term effects on the outcomes it produces.

Over time, these types of errors will erode public trust and create a negative reaction to the technology. On the other hand, when businesses practice and promote AI ethics, they help ensure society can continue to benefit from AI-driven innovations without sacrificing the privacy, safety, and well-being of their employees and customers.

Preventing harm

Without proper monitoring, AI can cause actual harm, preventing people from accessing the services and support they need. AI systems are only as reliable as the data used to train them. Failing to uphold AI ethics through appropriate training and monitoring can lead to privacy violations, discrimination, and a wide range of societal risks.

AI ethics is also crucial from a legal standpoint. Companies need to understand the potential problems with the technology so they can take steps to prevent civil lawsuits. For example, Proskauer reports that a group of claimants has filed a class action case against the business software company Workday Inc., alleging that its AI-powered applicant recommendation system discriminates against job applicants.

What are the biggest ethical challenges with AI applications?

AI technology is a complex topic with many factors at play, but ethical AI practices generally boil down to five essential areas. Understanding these fundamental points will help you implement technology more responsibly within your organization.

Fairness in AI systems

Avoiding bias and ensuring equitable treatment in hiring, promotions, performance reviews, and other business operations is essential when using AI. Unfortunately, flaws in AI algorithms or in the data developers use to train them can result in unfair or discriminatory outcomes.

Without proper ethical controls, AI can worsen and automate many forms of bias in the workplace, including:

- Gender bias

- Ageism

- Name bias

- Confirmation bias

These biases could cause harm to prospective and current employees by restricting their opportunities or penalizing them without good reason. For example, Reuters reported that Amazon discovered in 2015 that its AI recruiting engine was biased against women.

This occurred because the AI's training data featured predominantly male applicants. As a result, it was unfairly rejecting female candidates.

Companies found discriminating against protected classes because of AI biases could face serious repercussions, including civil suits and lasting damage to their reputation. Such AI models could also make the workforce less diverse, affecting the company's overall culture.

Transparency

When using artificial intelligence systems, you have to be open and honest about how they're influencing your decision-making processes. Otherwise, your AI-influenced decisions might seem arbitrary and unjustified. Make sure you can trace the origins of each decision, understand how the AI arrived at that conclusion, and explain the decision-making path to others.

Failing to be transparent about your AI use could cause consumers, partners, and investors to lose trust in your organization. This could make it more difficult for you to build and maintain relationships or secure contracts moving forward.
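One way to make decisions traceable is to write a short record every time an AI tool influences an outcome. The Python sketch below is only an illustration of that idea: the `DecisionRecord` class, its field names, and the sample values are all hypothetical, and your own records may need different details.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One AI-influenced decision, captured so it can be explained later."""

    subject_id: str       # pseudonymous ID of the person or case affected
    model_version: str    # which model produced the recommendation
    inputs: dict          # the data the model actually saw
    recommendation: str   # what the model suggested
    reviewer: str         # the human accountable for the final call
    final_decision: str   # what that human decided
    rationale: str        # plain-language explanation for the outcome
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_log_line(record: DecisionRecord) -> str:
    """Serialize a record as one JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


# Illustrative values only; none of these come from a real system.
record = DecisionRecord(
    subject_id="candidate-0042",
    model_version="screening-model-v3",
    inputs={"years_experience": 6, "skills": ["sql", "python"]},
    recommendation="advance",
    reviewer="hr-lead-17",
    final_decision="advance",
    rationale="Meets the posted experience and skills requirements.",
)
print(to_log_line(record))
```

Keeping the model's recommendation and the human's final decision in separate fields makes it easy to see later where a person agreed with the AI, where they overrode it, and why.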

Accountability

If something goes wrong when your business uses AI, placing all the blame on the technology isn't an option. Organizations and developers must take responsibility for all outcomes when using these systems.

Before implementing AI in your business, think about who bears responsibility for the decisions it makes and how you'll respond if any of those decisions have negative consequences. In addition, consider what oversight mechanisms you can put in place to prevent issues from developing.

Autonomy

Giving AI control of your organization may be a tempting prospect, especially when you consider how much it can accelerate and streamline your operations. However, human agency and decision-making are still vital even in AI-driven processes.

AI can behave in unpredictable ways, with some outcomes potentially increasing your security risks. An autonomous system could make a costly error affecting your ability to operate. In the worst cases, an AI system managing critical tasks could take an entire segment of your operations offline.

Most importantly, AI isn't capable of human judgment and doesn't understand context. Unlike a human decision-maker, it doesn't factor unique situations or your organization's mission, vision, and values into its decisions.

Keeping a human hand on the wheel maintains a sense of morality and empathy, both critical to running a successful business.

Societal risks

The importance of AI ethics extends beyond the walls of your organization. Failing to follow ethical principles when using this technology creates large-scale risks to your community and people around the world.

These are some of the biggest concerns:

- AI-driven misinformation: Some people use AI to create deepfakes — realistic, fabricated videos, images, and audio. This content can cause financial and reputational damage, spreading and seemingly confirming false narratives that have no basis in reality.

- Job displacement: Some sectors will experience significant job losses due to AI and automation, eliminating human positions and limiting employment prospects. While AI re-skilling can open doors to new opportunities, the cost, location, and time required for training can make it inaccessible to some people.

- Social inequality: AI can widen the wealth gap by putting lower-income individuals out of work while adding wealth to investors and leaders in technology. It can also worsen biases in areas such as hiring and lending, and generally disadvantage marginalized groups.

These problems have enormous ramifications for the future of the world. While a single business's decisions probably won't sway the outcomes in one way or another, the cumulative effect of every organization's and individual's choices when using AI will determine how the technology evolves moving forward.

For that reason, everyone has a responsibility to consider the potential adverse outcomes of AI and explore how to mitigate them.

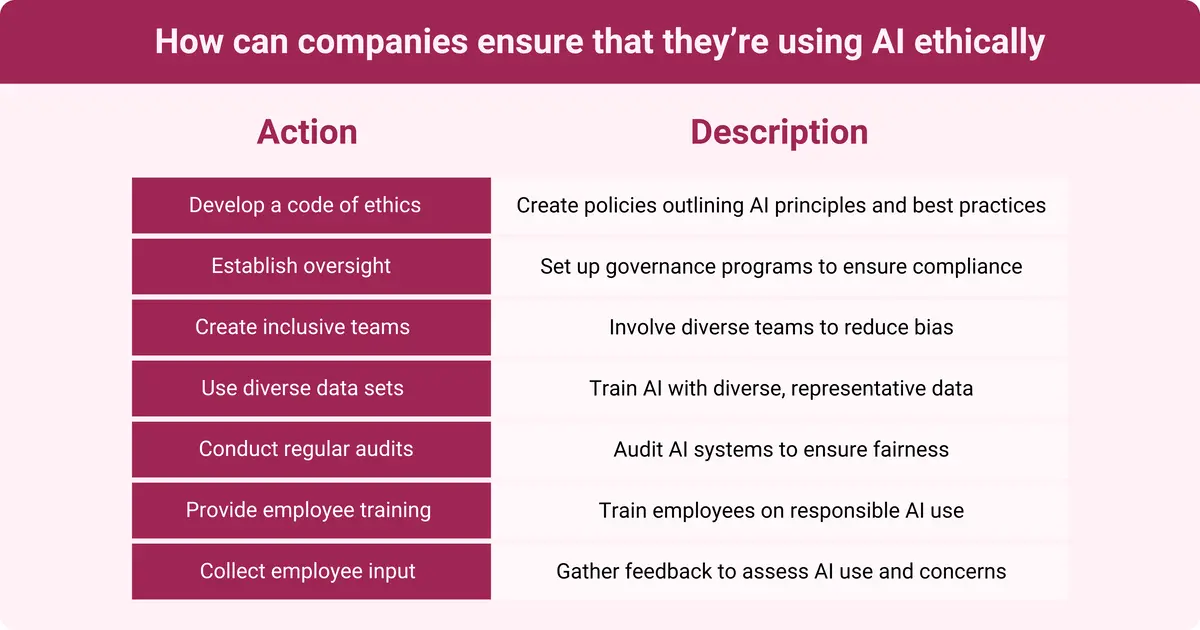

How can companies ensure that they’re using artificial intelligence ethically?

Ethically training and implementing AI helps minimize the risks that come with AI adoption. For example, programmers can make a point to use diverse datasets and monitor the system for issues with bias.

From an organizational perspective, the goal is to be clear and open about how and why your organization is using AI. These are specific ways you can clarify those points and achieve ethical AI use in your business:

- Develop a code of ethics: Write a set of policies your company will follow when using AI — essentially an internal AI code that clarifies standards and expectations. Cover the core principles, assess AI risks specific to your organization, and establish best practice guidelines.

- Establish oversight: Develop a governance program to oversee compliance and guide decision-making across all AI programs your organization uses. For example, some organizations have formed ethics committees to monitor AI use.

- Create inclusive teams: Involve people from different backgrounds in every aspect of your AI processes, from development to deployment. They will contribute unique perspectives and help reduce bias.

- Use diverse data sets: Train your AI using diverse data that accurately represents the real world. According to the MIT News article ‘Researchers reduce bias in AI models while preserving or improving accuracy’, programmers can also balance data by removing skewed data points to lower the risk of biased and discriminatory outcomes.

- Conduct regular audits: Periodically audit systems to detect and address bias and other ethical issues. Break down the AI's output, compare it to other similar scenarios, and evaluate it in terms of fairness, particularly when looking at characteristics such as gender or race.

- Provide employee training: Teach employees about AI use and ethical considerations. Explain how to use it responsibly, review best practices, and highlight potential problems staff should look out for when using AI tools.

- Collect employee input: Periodically check in with your team to see how they feel about using AI, confirm they're using it appropriately, and address their concerns. Building a feedback culture for AI and other aspects of your organization fosters confidence.

Through each of these steps, remember that problems can emerge at any stage of the AI lifecycle. As a result, organizations should employ ethical strategies at all points, from development to deployment to monitoring, to ensure AI use respects human rights at every stage.
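To make the audit step more concrete, here is a minimal Python sketch of one possible fairness check: it compares how often an AI tool selects people from each group. The function names, the sample data, and the 0.8 review threshold are assumptions for illustration. The threshold echoes the "four-fifths" rule of thumb used in US employment guidance, but treat it as a prompt for human review rather than a legal test.

```python
from collections import defaultdict

# Assumed review threshold, borrowed from the "four-fifths" rule of thumb.
REVIEW_THRESHOLD = 0.8


def selection_rates(outcomes):
    """Return the share of positive outcomes for each group.

    `outcomes` is an iterable of (group, selected) pairs, where `selected`
    is True when the AI recommended the person.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Return the lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group was favored
    return min(rates.values()) / highest


# Made-up audit sample: 100 people in each of two groups.
sample = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 20 + [("group_b", False)] * 80
)

rates = selection_rates(sample)
ratio = impact_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD
print(rates, ratio, needs_review)
```

In this made-up sample, the tool selects 40% of one group and 20% of the other, so the ratio is 0.5 and the result is flagged. A low ratio doesn't prove discrimination on its own, but it tells your oversight team where to look first.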

How can we ensure data privacy and security with AI?

Data security and privacy are always concerns when using AI, but you have the power to protect your employees, consumers, and other stakeholders.

First, take strong measures to prevent unauthorized access to data, such as using encryption and cybersecurity tools. In addition, continuously monitor activity within your AI systems to watch for potential breaches or flaws.

Another crucial step is to develop a usage policy defining how employees can use AI and what information they can enter. Minimize the data you use as much as possible, only collecting and storing information necessary for the AI to operate. Adding unnecessary data creates a greater risk with no benefit.

With that in mind, allow people to opt out of having their personal information entered into an AI system whenever possible. If the situation allows it, consider anonymizing some data points, particularly before using them for AI training.
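As a rough illustration of how data minimization, opt-outs, and masking identifiers could fit together, consider the Python sketch below. Everything in it is hypothetical, including the field names, the `ALLOWED_FIELDS` list, and the placeholder key. Note that the keyed hash it uses is pseudonymization, which is weaker than true anonymization: anyone holding the key can still link records to a person.

```python
import hashlib
import hmac
from typing import Optional

# Hypothetical allow-list: only the fields the AI tool actually needs.
ALLOWED_FIELDS = {"years_experience", "skills", "role_applied"}

# Placeholder only; a real key would come from a secrets manager.
SECRET_KEY = b"replace-with-a-managed-secret"


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Replace an identifier with a keyed SHA-256 hash (HMAC)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_record(raw: dict, opted_out: set) -> Optional[dict]:
    """Return a minimized record, or None if the person opted out."""
    if raw["email"] in opted_out:
        return None
    # Keep only allow-listed fields; names, emails, and so on are dropped.
    minimized = {k: v for k, v in raw.items() if k in ALLOWED_FIELDS}
    # Add a stable reference so records can be linked without the raw email.
    minimized["person_ref"] = pseudonymize(raw["email"])
    return minimized


# Made-up applicant data for illustration.
raw = {
    "name": "Jordan Example",
    "email": "jordan@example.com",
    "date_of_birth": "1990-01-01",
    "years_experience": 6,
    "skills": ["sql", "python"],
    "role_applied": "analyst",
}

safe = prepare_record(raw, opted_out=set())
skipped = prepare_record(raw, opted_out={"jordan@example.com"})
print(safe)
print(skipped)
```

Using an allow-list rather than a block-list means any new field added to your records stays out of the AI system until someone deliberately approves it.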

Finally, remember these same principles apply when working with a third-party AI tool. Before partnering with a provider, look closely at their privacy policies and security strategies. If they aren't transparent about how they gather, use, and store data, seek an alternative option.

Conclusion and implications

AI ethics is uncharted territory for many companies. They've just started using AI tools and haven't had the time to consider the larger implications of this transformative technology. While AI systems can be efficient, inspiring, and even fun, they're far from perfect.

If the current pattern continues, human-AI collaboration will play a central role in business, education, and everyday life moving forward. As it becomes more ingrained in these activities, the need for an ethical mindset becomes even greater. AI can help your business do great things — as long as you use it responsibly.

About the author

Ryan Stoltz

Ryan is a search marketing manager and content strategist at Workhuman, where he writes on the next evolution of the workplace. Outside of the workplace, he's a diehard 49ers fan and comedy junkie who has trouble avoiding sweets on a nightly basis.